What to Do When Generative AI is at Peak Hype

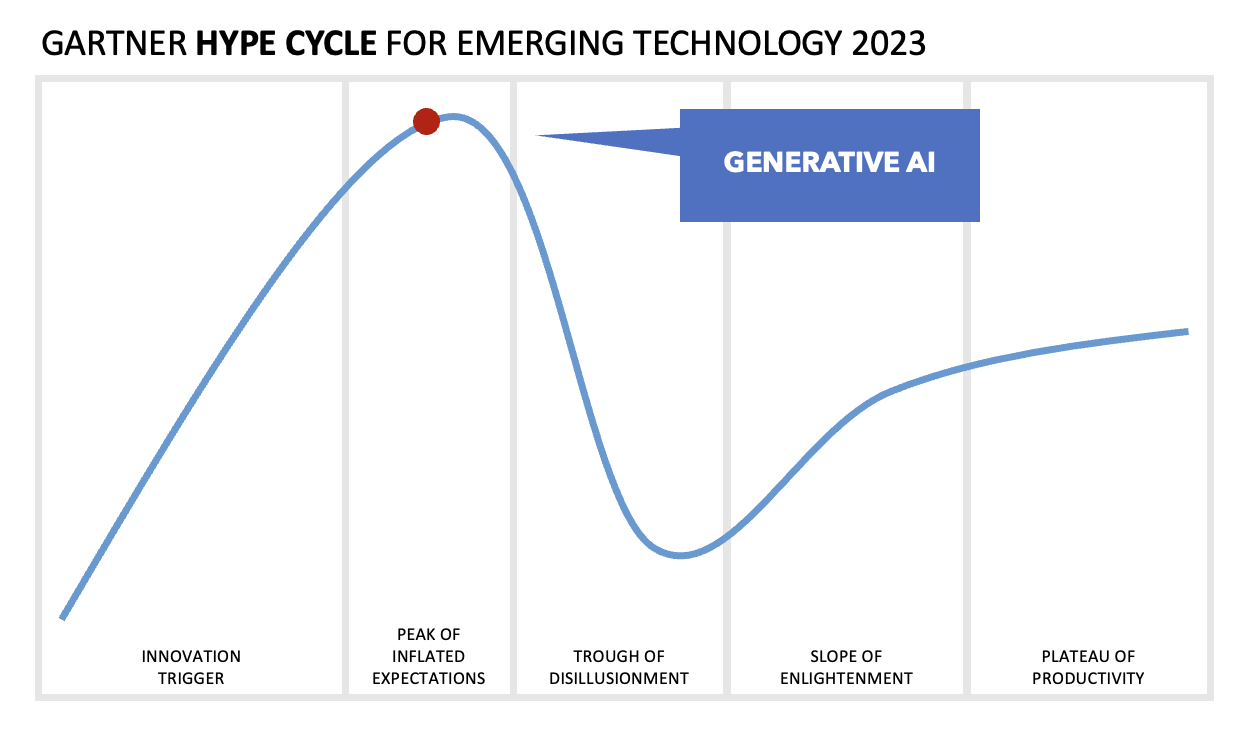

Gen AI is at the peak of Gartner's Hype Cycle and heading for the trough. But you can still solve problems with products that work today.

We’ve talked previously about Gartner’s Hype Cycle for contract technologies, but this time we’re focused on the hype surrounding Generative AI (Gen AI for short). Not surprisingly, Gen AI is peaking right now in terms of hype. Gartner calls this the Peak of Inflated Expectations. It’s the moment when everyone is buzzing about the disruptive potential of a new technology, perhaps frothing at the mouth with excitement. But is it going to last? Is it about to take your job? Should 44% of all lawyers be packing their bags and sharpening their barista skills?

Not so fast.

If you cast your eye to the right of the Peak of Inflated Expectations, you will discover the next phase of the Hype Cycle. It’s called the Trough of Disillusionment, and it’s where expectations for a new technology plummet to very low levels. Given that Gartner is predicting it will take 2-5 years for Gen AI to reach the Plateau of Productivity, that means we’re in for at least a year of disillusionment, perhaps several years. Why is this?

Like most new technology, Gen AI is likely to follow Amara’s law, whereby we tend to overestimate the impact of a new technology in the short run and underestimate its long-run effects. Bill Gates was a famous believer in (and repeater of) Amara’s law.

One reason for this pattern is a cognitive bias known as the Dunning-Kruger Effect. People with limited domain knowledge and skills tend to overestimate their actual knowledge and skills, and thus get carried away with bold predictions of disruption without realizing it. Even people with reasonable skills tend to overestimate their abilities, but to a lesser extent. True experts, on the other hand, tend to assess their abilities more in line with their actual performance, and perhaps even underestimate them. True genius doubts itself. Right now everyone has an opinion on Gen AI, and many of those opinions are overhyped because they rest on a partial understanding of where (and when) it will really make an impact.

The hype and excitement around Gen AI stems in large part from the fact that it is the first generalized AI both to be accessible and to create a good first impression. By accessible, I mean that just about anyone can sign up and start chatting with an AI like ChatGPT, and by generalized, I mean that you can chat about anything you like. And, as we all know, the chatter it throws back at you does create a good first impression. It sounds good. It’s like chatting with a real person. Quite a smart person, apparently.

But then people get carried away. With Gen AI as their hammer, everything looks like a nail. It can solve this problem and that problem. It can do just about anything. It will replace half the world’s lawyers. It will write Hollywood scripts. It will cure cancer.

No doubt it will help to solve many problems. Probably not all the problems people are thinking of right now, and perhaps many problems we’re currently overlooking. But first, it needs to be transformed from cool technology into useful product, and that tends to be much harder than it looks.

In legal, for example, hallucination is a challenge that looms large. One lawyer has already suffered the public humiliation of submitting made-up case law thanks to ChatGPT hallucinations. You can ask a Gen AI chatbot for citations to support a particular argument. It will boldly give you an answer that sounds plausible. It will often be true. But sometimes it will be made up. A complete fabrication designed to sound right, but not necessarily to be right. That’s fine for marketing slogans. If it sounds catchy, that’s all you need. But it’s not fine for legal applications where the wrong answer can land you in very hot water.

This is not to say that Gen AI has no use cases in legal. It does. But if there’s a risk of hallucination, then it should only be used with human review or some other form of triangulation. It makes a good research co-pilot, for example, where the human lawyer or expert knows they need to treat the AI with a certain degree of caution.

Gen AI also has other challenges. The latest is a wave of claims of industrial-scale copyright infringement from authors whose works were used to train the large language models (LLMs). There’s a real possibility that some of these claims will threaten the viability of some LLMs in the near term.

What’s a technology buyer to do? Mostly be wary of products “powered by Gen AI” that are rushing to market. Focus instead on problems solved and good UX, rather than buzzy AI labels.

At Catylex, for example, we deliver contract analytics. The problem we solve is translating contractual text into useful data so that you can find risk and answer questions quickly. Do we use AI in the product? Yes. Are we using Gen AI? Yes, but cautiously, to solve specific problems of contract analysis where it outperforms other methods. Do our customers care what flavor of AI we’re running under the covers? Not really. They want to know that it works out of the box, works well, delivers data more cost-effectively than human review, keeps the data secure, and can generate structured data that’s fit for consumption by other enterprise systems.

Don’t let the hype overwhelm you. You don’t need to understand transformers, vectors, and embeddings. Focus on the problems you need to fix and the products that can fix them today. Then try them out for yourself. If it works, buy it. If it feels like a wobbly prototype, don’t buy it. Simple, really.

P.S. There's a great back story about the Dunning-Kruger study which is worth a look...